Workflows for plan, apply, and destroy

Last year we announced Gruntwork Pipelines, a CI / CD pipeline that automates updates to your infrastructure. Until now, the pipeline only detected additions and changes to your infrastructure. We’ve now added support for automatically destroying resources! The pipeline detects when you’ve deleted a module folder and automatically runs destroy on that module.

I’ll do a quick walkthrough of how Gruntwork Pipelines works with Terraform, showing how apply works and introducing the new destroy functionality. After that, I’ll show you how to get started.

Here’s a quick video demo of Gruntwork Pipelines in action:

A Gruntwork Pipelines Design Primer

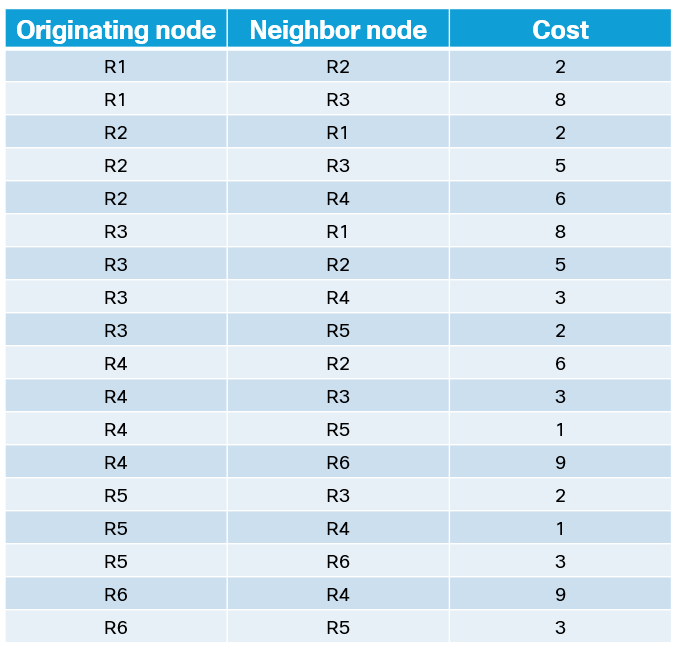

The CI / CD stack consists of the CI server you’re using (e.g., CircleCI, Jenkins, GitLab) plus the ECS Deploy Runner: an ECS Fargate task that runs the actual deployment, along with a Lambda function that triggers it.

You configure your CI system to execute the ECS Deploy Runner using that CI system’s config file. You can configure arbitrary infrastructure workflows. For example, when using Terraform and Terragrunt, you can configure a plan and apply workflow.

In the typical workflow, commits to any branch of the infrastructure repo trigger terragrunt plan (or terraform plan if the repo doesn’t use Terragrunt). Commits to the default branch (i.e., main) trigger terragrunt plan (or terraform plan) followed by an approval step where you can review the plan. If you approve, the pipeline runs terragrunt apply (or terraform apply), so real changes to the infrastructure are applied only when code is merged into main.
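To make the branching rule concrete, here is a minimal Python sketch that models it as data. It is a hypothetical illustration, not Gruntwork’s code: the Step class and the workflow_steps function are names made up for this example, and the sketch only decides which steps would run. It doesn’t invoke the ECS Deploy Runner or any CI system.

```python
from dataclasses import dataclass

# Hypothetical model of the plan / approve / apply rule described above.
# It only decides which steps would run; it does not call any CI system.


@dataclass
class Step:
    kind: str           # "run" for a command, "approval" for a manual gate
    command: list[str]  # empty for approval steps


def workflow_steps(branch: str, uses_terragrunt: bool, default_branch: str = "main") -> list[Step]:
    """Return the ordered steps for a commit pushed to `branch`."""
    tool = "terragrunt" if uses_terragrunt else "terraform"
    steps = [Step("run", [tool, "plan"])]
    if branch == default_branch:
        # Only the default branch can change live infrastructure,
        # and only after someone approves the plan.
        steps.append(Step("approval", []))
        steps.append(Step("run", [tool, "apply"]))
    return steps


if __name__ == "__main__":
    for branch in ("feature/update-vpc", "main"):
        print(branch, [step.kind for step in workflow_steps(branch, uses_terragrunt=True)])
```

Keeping the decision in a pure function like this makes the rule easy to unit test separately from the CI configuration that would act on it.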

We use the ECS Deploy Runner as a bastion that executes the actions that change infrastructure, rather than running terraform / terragrunt directly on the CI server. This enforces the principle of least privilege and guards against threats to your infrastructure. You can read more about the threat model of any CI / CD solution and how Gruntwork uniquely addresses these problems.

The CI / CD Workflow: Apply Changes

Let’s say you have a Terraform module that deploys some resource. Whenever you need to change that module’s configuration, you can rely on the pipeline to deploy those changes to your live infrastructure automatically.

- Make a change to your Terraform or Terragrunt code. Save that change, commit it, and push it to a new branch. Open a pull request for that branch.

- The CI server picks up on that new pull request and runs terragrunt plan (or terraform plan) and any tests that are also configured.

- Once the plan is complete, you manually check the output of that plan by opening up the job and scrolling through the logs that show the plan output. It’s important to check the plan output to make sure the changes make sense for your application.

- If the plan looks good, get approval to merge that pull request into main.

- The CI server detects that main has received a new commit and runs terragrunt plan (or terraform plan) along with tests.

- It then holds the job and waits for manual approval to continue further.

- You recheck the plan and click the button to approve the job.

- The CI server now runs terragrunt apply (or terraform apply). Your changes are now applied to the live infrastructure.

Here’s a live walkthrough video of applying changes to infrastructure. The example adds back the sample applications that were previously destroyed; the same workflow applies to all module changes other than destroys.

The CI / CD Workflow: Destroy

Now let’s say you’d like to delete a module. Previously, the pipeline had no way to destroy infrastructure automatically. With this update, you can trigger a destroy workflow simply by removing the corresponding folder! Let’s see what happens when we do that.

- Remove the module folder that contains the terragrunt.hcl file from your repository. Commit the change, push it to a new branch, and open a pull request. (If you’re not using Terragrunt, remove the module folder that contains the Terraform code.)

- The CI server picks up on that new pull request and runs terragrunt plan -destroy (or terraform plan -destroy) and any tests that are also configured.

- Again, you manually check the output of that destroy plan by opening up the job and scrolling through the logs. You should see several resources slated for destruction.

- If the plan looks good, get approval to merge that pull request into main.

- The CI server detects that main has received a new commit and runs terragrunt plan -destroy (or terraform plan -destroy) along with tests.

- It then holds the job and waits for manual approval to continue further.

- You recheck the plan and click the approval button.

- The CI server now runs terragrunt apply -destroy (or terraform apply -destroy). Those resources are now removed from the live infrastructure.
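Detecting a deleted module can also be sketched in Python. This sketch assumes, hypothetically, that the pipeline compares the pull request against main using git diff --name-status and treats a deleted terragrunt.hcl as a removed module. The names deleted_modules and destroy_commands are illustrative and are not part of Gruntwork Pipelines. The sketch covers only detection and command selection; it doesn’t show how the pipeline reaches the deleted configuration in order to destroy it.

```python
from pathlib import PurePosixPath

# Hypothetical sketch: find module folders removed in a change set, assuming a
# module is identified by its terragrunt.hcl file. Not Gruntwork's implementation.


def deleted_modules(name_status: str, marker: str = "terragrunt.hcl") -> list[str]:
    """Return folders whose marker file was deleted.

    `name_status` is text in the format printed by `git diff --name-status`:
    one change per line, a status letter, a tab, then the path ("D" = deleted).
    """
    folders = set()
    for line in name_status.splitlines():
        parts = line.split("\t")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        status, path = parts[0], PurePosixPath(parts[1])
        if status == "D" and path.name == marker:
            folders.add(str(path.parent))
    return sorted(folders)


def destroy_commands(tool: str, on_default_branch: bool) -> list[list[str]]:
    """Commands for a removed module: plan on every branch, apply only on main."""
    commands = [[tool, "plan", "-destroy"]]
    if on_default_branch:
        # In the workflow above, a manual approval sits between these two commands.
        commands.append([tool, "apply", "-destroy"])
    return commands


if __name__ == "__main__":
    diff = "M\tprod/app/terragrunt.hcl\nD\tdev/sample-app/terragrunt.hcl\nD\tdev/sample-app/README.md\n"
    for module in deleted_modules(diff):
        print(module, destroy_commands("terragrunt", on_default_branch=True))
```

Keying on a single marker file keeps the check simple; a plain Terraform repo would need a different rule, such as checking that every .tf file in a folder was deleted.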

These steps are also described in our example Reference Architecture documentation, which you can find here.

Check out this video that walks you through a live destroy. It shows a practical use case for Gruntwork Reference Architecture users who eventually want to remove their sample applications.

How do I get started?

Existing users of the Gruntwork Reference Architecture, which comes with a Terragrunt-based infrastructure-live repository that uses the Gruntwork AWS Service Catalog, can now destroy infrastructure through the automated CI / CD pipeline by upgrading their architecture code. Subscribers to the Gruntwork IaC Library who don’t have a Reference Architecture also have access to all the code for the Gruntwork Pipelines solution, including the new destroy support, in the IaC Library’s CI repository. They can deploy the pipeline by following this guide on our website.

How do I upgrade to get destroy?

If you have a Gruntwork Reference Architecture or Gruntwork Pipelines solution in place, you can get support for destroy by following the migration guide posted in terraform-aws-service-catalog and in terraform-aws-cis-service-catalog. At a high level, you’ll need to do the following:

- Pull in changes to the infrastructure-live repo’s CI server config and CI scripts.

- Rebuild the container images for the ecs-deploy-runner so that these images include the new versions of the terraform-aws-ci code.

- Redeploy the ecs-deploy-runner in all environments.

What’s next?

Today the Gruntwork Pipelines solution destroys modules one folder at a time. We would love to add support for terragrunt run-all destroy and run-all apply so that users can easily spin whole environments up and down. We’ve created an issue to track work on this.

Getting access

If you want to set up a Gruntwork Pipelines solution, follow along with our Gruntwork Pipelines guide.

If you don’t have a Reference Architecture, talk to us about getting yourself one, or follow our guides to deploy one yourself.