Learn about 10 problems that have been fixed in Terraform since the 2nd edition

I’m excited to announce that the early release of the 3rd edition of Terraform: Up & Running is now available! The 2nd edition came out in 2019 and it is remarkable how much has changed since then: Terraform went through five major releases (0.13, 0.14, 0.15, 1.0, and 1.1), most Terraform providers went through several major upgrades of their own (e.g., the AWS provider went from 2.0 to 3.0 and 4.0), and the Terraform community has continued to grow at a frantic pace, which has led to the emergence of many new best practices, tools, and modules.

I’ve tried to capture all these new developments in the 3rd edition of the book, adding 100 pages (!) of new content on top of the 2nd edition, including two totally new chapters, plus major updates to all the existing chapters. To give you a preview of all this new content, I’ll do a quick walkthrough of the top 10 problems that have been fixed in Terraform over the last few years.

This is a two-part blog post series. In the first part of the series (this blog post), I’ll go into detail on the following 5 problems and their solutions, based on snippets from the 3rd edition of the book:

- Multiple regions, accounts, and clouds: multi-region replication, plus an example of using Terraform and Kubernetes to deploy Docker containers.

- Provider versioning: required_providers and the lock file.

- Secrets management: examples of using different types of secret management tools (e.g., Vault, KMS, etc.) with Terraform.

- CI / CD security: examples of using OIDC and isolated workers to set up a secure CI / CD pipeline for Terraform.

- Module iteration: examples of using count and for_each to do loops and conditionals with module blocks.

In the second part of the series, which will come out when the final version of the 3rd edition is published, I’ll cover 5 more problems and solutions, including input validation, refactoring, testing, policy enforcement, and maturity. You can get access to the full version of all of this content now on the O’Reilly website!

Multiple regions, accounts, and clouds

The problem

Just about all of the code examples in the 2nd edition of the book used a single region in a single account of a single cloud (AWS). But what if you wanted to deploy into multiple regions? Or multiple accounts? Or multiple clouds (e.g., AWS, GCP, and Azure)?

The solution

To answer these questions, the 3rd edition of the book includes a brand new chapter: Chapter 7, Working with Multiple Providers. This chapter shows how to work with multiple Terraform providers to deploy to multiple regions, multiple accounts, and multiple clouds.

To deploy into multiple regions or accounts, you create multiple copies of the same provider, each configured differently, and each with a unique alias parameter. You then tell resources and modules which provider alias to use via the provider and providers parameters, respectively. To see this in action, here’s a code snippet from Chapter 7 that shows how to use multiple provider blocks with a mysql module to deploy MySQL with multi-region replication:

# Configure one provider to deploy into us-east-2
provider "aws" {
  region = "us-east-2"
  alias  = "primary"
}

# Configure another provider to deploy into us-west-1
provider "aws" {
  region = "us-west-1"
  alias  = "replica"
}

# Deploy the MySQL primary in us-east-2
module "mysql_primary" {
  source = "../../../../modules/data-stores/mysql"

  providers = {
    aws = aws.primary
  }

  db_name     = var.db_name
  db_username = var.db_username
  db_password = var.db_password

  # Must be enabled to support replication
  backup_retention_period = 1
}

# Deploy a MySQL replica in us-west-1
module "mysql_replica" {
  source = "../../../../modules/data-stores/mysql"

  providers = {
    aws = aws.replica
  }

  # Make this a replica of the primary
  replicate_source_db = module.mysql_primary.arn
}
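The snippet above picks an alias for each module through the providers map. For an individual resource or data source, you set the singular provider argument instead, and the same alias pattern extends to multiple accounts when a provider assumes an IAM role in another account. Here is a minimal sketch of that idea; the account ID 111111111111 and the role name OrganizationAccountAccessRole are placeholder assumptions, not values taken from the book:

```hcl
# Default provider: deploys into the account whose credentials you are using
provider "aws" {
  region = "us-east-2"
}

# Aliased provider: deploys into another account by assuming an IAM role there.
# The account ID and role name are placeholders.
provider "aws" {
  region = "us-east-2"
  alias  = "child"

  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/OrganizationAccountAccessRole"
  }
}

# No provider argument: uses the default (unaliased) provider
data "aws_caller_identity" "parent" {}

# Resources and data sources select an alias with the singular provider argument
data "aws_caller_identity" "child" {
  provider = aws.child
}

# If the role assumption succeeds, these outputs show two different account IDs
output "parent_account_id" {
  value = data.aws_caller_identity.parent.account_id
}

output "child_account_id" {
  value = data.aws_caller_identity.child.account_id
}
```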

To deploy into multiple clouds, you create multiple copies of different providers. Readers of the first two editions of this book often asked for examples of how to work with multiple clouds (e.g., AWS, Azure, GCP), but I struggled to find an example where it was practical to do this in a single module. Here’s why:

At a high level, there are two opposite extremes in how companies approach multi-cloud:

- Transparent portability: With this approach, the idea is to try to use all the clouds as one unified computing platform, abstracting away all the differences between cloud providers to make it easier to migrate a workload from one cloud to the other.

- Cloud native: With this approach, the idea is to try to use each cloud independently, leveraging its unique services as much as possible.

In my experience, the transparent portability approach is much less effective. It’s too focused on chasing the purported benefits of multi-cloud—avoiding vendor lock-in, reduced pricing, increased resiliency—that, as I discuss in Chapter 7, are mostly myths, and don’t pay off for most companies. Even more importantly, the desire for transparent portability vastly underestimates the significant costs of trying to paper over the differences between clouds: the offerings from each cloud may look superficially similar—e.g., they all offer virtual machines—but under the hood, there are many differences, including significant variation in the mechanics of authentication, authorization, networking, data storage, replication, partitioning, secrets management, compliance, security model, performance, latency, availability, scalability, limits/throttles, support, and much else.

Therefore, except for a few niche cases, I recommend the cloud native approach. This is also the approach that Terraform is designed for: you can use Terraform with multiple clouds, but you have to write separate code for each cloud, using the providers and resources native to that cloud. As a result, even for multi-cloud deployments, it’s unusual to build a single Terraform module that deploys into multiple clouds (that is, one module that uses the providers for several different clouds); it’s much more common to keep the code for each cloud in separate modules.

So, instead of adding an unrealistic multi-cloud example, the 3rd edition of the book includes an example of how to use multiple different providers together in a more realistic scenario: namely, how to use the AWS provider with the Kubernetes provider to deploy Dockerized apps on Amazon EKS.

Chapter 7 includes a crash course on Docker, Kubernetes, and EKS, and by the end of the chapter, you will have built several modules that allow you to use the following simple code to spin up an EKS cluster and deploy a web app into it:

# Use the AWS provider to deploy into the AWS us-east-2 region
provider "aws" {
  region = "us-east-2"
}

# Use the Kubernetes provider to deploy into an EKS cluster
provider "kubernetes" {
  host = module.eks_cluster.cluster_endpoint
  cluster_ca_certificate = base64decode(
    module.eks_cluster.cluster_certificate_authority[0].data
  )
  token = data.aws_eks_cluster_auth.cluster.token
}

data "aws_eks_cluster_auth" "cluster" {
  name = module.eks_cluster.cluster_name
}

# Deploy an EKS cluster
module "eks_cluster" {
  source = "../../modules/services/eks-cluster"

  name           = "example-eks-cluster"
  min_size       = 1
  max_size       = 2
  desired_size   = 1
  instance_types = ["t3.small"]
}

# Deploy a simple web app into the EKS cluster using a
# Kubernetes Deployment
module "simple_webapp" {
  source = "../../modules/services/k8s-app"

  name           = "simple-webapp"
  image          = "training/webapp"
  replicas       = 2
  container_port = 5000

  depends_on = [module.eks_cluster]
}
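The internals of the k8s-app module aren't shown in this post. As a rough, hypothetical sketch (not the book's actual module), a module with these four inputs could wrap a kubernetes_deployment resource from the Kubernetes provider as shown below. A real module would likely also need something like a Kubernetes Service to route traffic to the pods, which this sketch omits:

```hcl
# Hypothetical inputs, matching the arguments passed to module "simple_webapp"
variable "name" {
  description = "The name to use for all resources created by this module"
  type        = string
}

variable "image" {
  description = "The Docker image to run"
  type        = string
}

variable "container_port" {
  description = "The port the Docker image listens on"
  type        = number
}

variable "replicas" {
  description = "How many replicas to run"
  type        = number
}

locals {
  # Labels that tie the Deployment's selector to the Pods it manages
  pod_labels = {
    app = var.name
  }
}

# Run var.replicas copies of the container, managed by a Kubernetes Deployment
resource "kubernetes_deployment" "app" {
  metadata {
    name = var.name
  }

  spec {
    replicas = var.replicas

    selector {
      match_labels = local.pod_labels
    }

    template {
      metadata {
        labels = local.pod_labels
      }

      spec {
        container {
          name  = var.name
          image = var.image

          port {
            container_port = var.container_port
          }
        }
      }
    }
  }
}
```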

Provider versioning

The problem

Terraform providers change all the time, and sometimes in backward incompatible ways. How do you ensure that all of your team members and your CI servers are using the same provider versions everywhere?

The solution

To solve this problem, Terraform 0.13 introduced the required_providers block and Terraform 0.14 introduced the lock file, both of which are now covered in Chapter 8, Production Grade Terraform Code. The required_providers block allows you to specify which providers your code depends on, where to download the code for that provider, and the version constraints to enforce:

terraform {
  required_providers {
    # Use any 4.x version of the AWS provider
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }

    # Use the Kubernetes provider at any version at or above 2.7
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.7.0"
    }
  }
}
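To make the ~> ("pessimistic") operator concrete, here is a sketch of the same block with the AWS constraint spelled out as an explicit range. The comments on the Kubernetes entry point out that an open-ended constraint like >= 2.7.0 has no upper bound; the ~> 2.7 alternative mentioned there is illustrative, not what the book uses:

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Same range as "~> 4.0": any release >= 4.0 and < 5.0
      version = ">= 4.0, < 5.0"
    }

    kubernetes = {
      source = "hashicorp/kubernetes"
      # No upper bound: a future 3.x release would also satisfy this.
      # "~> 2.7" would instead allow >= 2.7 and < 3.0.
      version = ">= 2.7.0"
    }
  }
}
```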

The first time you run terraform init, Terraform will download the provider code you’ve specified in the required_providers block and record the exact versions it downloaded in a .terraform.lock.hcl file:

provider "registry.terraform.io/hashicorp/aws" {
  version     = "4.2.0"
  constraints = "~> 4.0"
  hashes = [
    "h1:7xPC2b+Plr514HPRf837t3YFzlSSIY03StrScaVIfw0=",
    "h1:N5oVH/WT+1U3/hfpqs2iQ6wkoK+1qrPbYZJ+6Ptx6a0=",
    "h1:qfnMtwFbsVJWvzxUCajm4zUkjEH9GDdT3FFYffEEhYQ=",
    "h1:s29zqs8kRCi7hFmF2IWn0OAsVrh+oEyOzB1W/aeeQ3I=",
  ]
}

provider "registry.terraform.io/hashicorp/kubernetes" {
  version     = "2.8.0"
  constraints = ">= 2.7.0"
  hashes = [
    "h1:LZLKGKTlBmG8jtMBdZ4ZMe+r15OQLSMYV0DktfROk+Y=",
    "h1:UZCCMTH49ziz6YDV5oCCoOHypOxZWvzc59IfZxVdWeI=",
    "h1:aU9axQagkwAmDUVqRY71UU1kgjBrgFKQYgpAhqQnOEk=",
    "h1:tfU8BStZIt2d6KIGTRNjWb09zeVzh3UFGNRGVgFce+A=",
  ]
}

If you check this lock file into version control, any team member or CI server that runs init will end up downloading the exact same versions of the provider code, so there’s no chance of pulling in newer (possibly backward incompatible) versions by accident. Chapter 8 also includes new examples of how to use tools like tfenv and tgswitch to manage Terraform and Terragrunt versions.
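The lock file pins provider versions but not the version of Terraform itself. Alongside a tool like tfenv, a terraform block can declare a required_version constraint so that running the code with an incompatible Terraform binary fails immediately instead of proceeding. The range below is an illustrative assumption matching the 1.x releases discussed in this post:

```hcl
terraform {
  # Refuse to run with any Terraform binary outside the 1.x series
  required_version = ">= 1.0.0, < 2.0.0"
}
```

With this in place, running terraform init or terraform plan with, say, a 0.15 binary exits with an error about an unsupported Terraform Core version.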

Secrets management

The problem

When working with Terraform code, you often have to manage secrets, such as API keys and database passwords. How do you handle these secrets without storing them in plain text or leaking sensitive data into your logs?

The solution

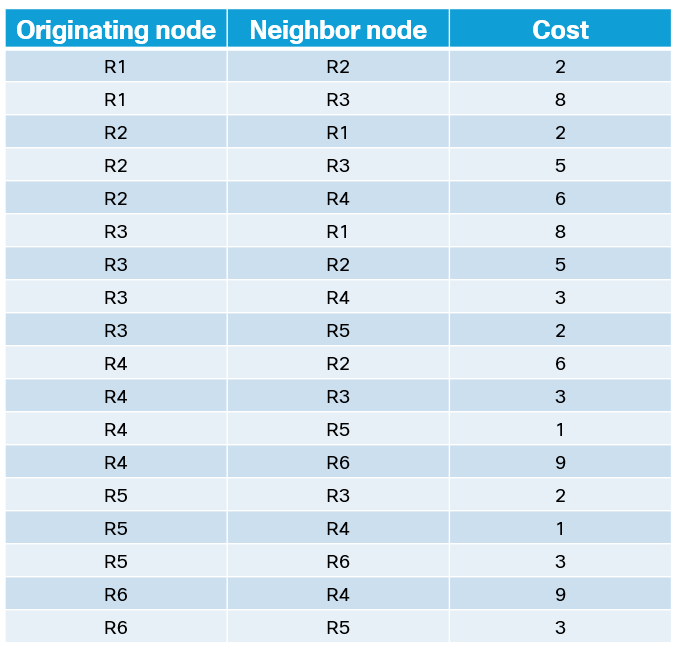

The 3rd edition contains an entirely new chapter to answer this question: Chapter 6, Managing Secrets with Terraform. This chapter includes an overview of secrets management and a comparison of a variety of secret management tools:

Chapter 6 also has examples of how to use several types of secret stores with Terraform, including how to pass secrets from a personal secrets manager such as 1Password to Terraform, how to read secrets from files encrypted with KMS, and the example below, which shows how to read database credentials from AWS Secrets Manager:

# Read database credentials from AWS Secrets Manager
data "aws_secretsmanager_secret_version" "creds" {
  secret_id = "db-creds"
}

locals {
  # The credentials are stored in JSON, so we need to decode them
  db_creds = jsondecode(
    data.aws_secretsmanager_secret_version.creds.secret_string
  )
}

resource "aws_db_instance" "example" {
  identifier_prefix   = "terraform-up-and-running"
  engine              = "mysql"
  allocated_storage   = 10
  instance_class      = "db.t2.micro"
  skip_final_snapshot = true
  # MySQL database names allow letters, digits, and underscores (no hyphens)
  db_name = "example_db"

  # Pass the secrets to the database
  username = local.db_creds.username
  password = local.db_creds.password
}
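For the KMS approach mentioned above, one option is the aws_kms_secrets data source, which decrypts a base64-encoded KMS ciphertext when Terraform reads it. A minimal sketch, assuming a hypothetical file named db-creds.yml.encrypted next to the module that contains the base64 ciphertext of a YAML document with username and password keys:

```hcl
# Decrypt the contents of a KMS-encrypted file. The file name is hypothetical;
# its contents must be the base64-encoded ciphertext returned by KMS.
data "aws_kms_secrets" "creds" {
  secret {
    name    = "db"
    payload = file("${path.module}/db-creds.yml.encrypted")
  }
}

locals {
  # plaintext is a map keyed by each secret's name; in this sketch
  # the decrypted value is YAML, so decode it into an object
  db_creds = yamldecode(data.aws_kms_secrets.creds.plaintext["db"])
}
```

The rest of the configuration can then read local.db_creds.username and local.db_creds.password exactly as in the Secrets Manager example.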

The entire book has also been updated to mark all input and output variables that could contain secrets with the new sensitive keyword, which was introduced in Terraform 0.14 and 0.15 to tell Terraform to never log these values, as they may contain sensitive data:

variable "db_username" {
  description = "The username for the database"
  type        = string
  sensitive   = true
}

variable "db_password" {
  description = "The password for the database"
  type        = string
  sensitive   = true
}
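Output values follow the same rule: if an output exposes a sensitive value, it must be marked sensitive too, or Terraform reports an error. A minimal sketch, assuming the sensitive db_password variable declared above (the output name is illustrative):

```hcl
# Without sensitive = true, Terraform rejects this output
# because it refers to a sensitive value
output "db_password" {
  description = "The password for the database"
  value       = var.db_password
  sensitive   = true
}
```

Keep in mind that sensitive only redacts values from plan and apply output; Terraform still stores them in plain text in the state file, so the state itself must be protected.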

CI / CD security

The problem

In order to effectively use Terraform as a team, you need to set up a CI / CD pipeline that runs plan and apply automatically on your code changes. To deploy arbitrary Terraform changes, your CI server needs arbitrary permissions—which is just another term for admin permissions. And that’s a big problem, because now you’re mixing the following ingredients all in one place:

- The CI server is typically accessible to every dev on your team.

- CI servers are designed to execute arbitrary code.

- The CI server now has admin permissions.

No matter how you slice it, this is a bad combination. In effect, what you’ve done is given every one of your developers admin permissions, plus exposed admin permissions to any snippet of code that happens to run on that server. Attackers are aware of this and are starting to target CI servers in particular (e.g., see 10 real-world stories of how we’ve compromised CI/CD pipelines).

The solution

There are several ingredients to setting up a secure CI / CD pipeline for Terraform. The first ingredient is to handle credentials on your CI server securely. The 3rd edition of the book adds examples of using environment variables, IAM roles, and arguably the most secure option of all, OpenID Connect (OIDC). Chapter 6 includes an example of using OIDC with GitHub Actions to authenticate to AWS, via an IAM role, without having to manage any credentials at all:

# Authenticate to AWS using OIDC
- uses: aws-actions/configure-aws-credentials@v1
  with:
    # Specify the IAM role to assume here
    role-to-assume: arn:aws:iam::123456789012:role/example-role
    aws-region: us-east-2

# Install Terraform using HashiCorp's setup-terraform Action
- uses: hashicorp/setup-terraform@v1
  with:
    terraform_version: 1.1.0
    terraform_wrapper: false

# Run Terraform in its own step (a step can't have both uses and run)
- run: |
    terraform init
    terraform apply -auto-approve
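The workflow above assumes that the IAM role it assumes already trusts GitHub's OIDC provider. A minimal sketch of that AWS side in Terraform follows. The example-org/example-repo repository and main branch are hypothetical, the role name matches the placeholder ARN in the workflow, and fetching the thumbprint with the tls provider's tls_certificate data source follows a common pattern rather than being the only option:

```hcl
# Look up the TLS certificate chain of GitHub's OIDC endpoint
data "tls_certificate" "github" {
  url = "https://token.actions.githubusercontent.com"
}

# Register GitHub Actions as an OIDC identity provider in this AWS account
resource "aws_iam_openid_connect_provider" "github_actions" {
  url             = "https://token.actions.githubusercontent.com"
  client_id_list  = ["sts.amazonaws.com"]
  thumbprint_list = [data.tls_certificate.github.certificates[0].sha1_fingerprint]
}

# Trust policy: only workflows from one repo and branch may assume the role
data "aws_iam_policy_document" "assume_role_policy" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.github_actions.arn]
    }

    condition {
      test     = "StringEquals"
      variable = "token.actions.githubusercontent.com:sub"
      # Hypothetical org, repo, and branch
      values = ["repo:example-org/example-repo:ref:refs/heads/main"]
    }
  }
}

# The role the workflow assumes; attach only narrowly scoped policies to it
resource "aws_iam_role" "example" {
  name               = "example-role"
  assume_role_policy = data.aws_iam_policy_document.assume_role_policy.json
}
```

On the GitHub side, the workflow job also needs the id-token: write permission so that it can request an OIDC token.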

The second ingredient is to strictly limit what the CI server can do once it has authenticated: for example, in the OIDC snippet above, you’ll want to severely limit the permissions in that IAM role. But then how do you handle the admin permissions you need to deploy arbitrary Terraform changes?

The answer is that instead of giving the CI server admin permissions, you isolate the admin permissions to a totally separate worker: e.g., a separate server, a separate container, etc. That worker should be extremely locked down, so no developers have access to it at all, and the only thing it allows is for the CI server to trigger that worker via an API that is designed to be extremely limited. For example, the API your worker exposes might only allow you to run specific commands (e.g., terraform plan and terraform apply), in specific repos (e.g., your live repo), in specific branches (e.g., the main branch), and so on.

This way, even if an attacker gets access to your CI server, they still won’t have access to the admin credentials, and all they can do is request a deployment on some code that’s already in your version control system. This is still not great, but it’s not nearly as much of a catastrophe as leaking the admin credentials fully. Check out Gruntwork Pipelines for an example implementation of both the OIDC and admin worker approach.

Module iteration

The problem

You built a module and you want to use it several times (in a loop, essentially) without having to copy and paste the code. However, Terraform 0.12 and below didn’t support count or for_each on module blocks.

The solution

Terraform 0.13 added support for using count and for_each on module blocks, so Chapter 5, Terraform Tips and Tricks, has been updated to show you how to use loops and conditional logic with your modules.

For example, if you have an iam-user module that can create a single IAM user, you can use for_each on a module block to create 3 IAM users as follows:

locals {
  user_names = ["neo", "trinity", "morpheus"]
}

module "users" {
  source   = "../../../modules/landing-zone/iam-user"
  for_each = toset(local.user_names)

  user_name = each.key
}
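The same mechanism handles conditionals: setting count to 0 or 1 turns a module off or on. A minimal sketch reusing the iam-user module path from above; the create_admin_user variable, the "admin" user name, and the module's user_arn output are hypothetical, and the one() function requires Terraform 0.15 or later:

```hcl
variable "create_admin_user" {
  description = "If set to true, create the admin IAM user"
  type        = bool
  default     = false
}

# count = 1 creates one instance of the module; count = 0 creates none
module "admin_user" {
  source = "../../../modules/landing-zone/iam-user"
  count  = var.create_admin_user ? 1 : 0

  user_name = "admin"
}

# With count, module.admin_user is a list. one() returns the attribute of its
# single element, or null when the list is empty. user_arn is hypothetical.
output "admin_user_arn" {
  value = one(module.admin_user[*].user_arn)
}
```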

Learn more

You’ve now had a small taste of just 5 of the problems that have been solved in the Terraform world in the last few years and are now covered by the 3rd edition of Terraform: Up & Running, including how to work with multiple regions, accounts, and clouds, how to control your provider versions, how to manage secrets securely with Terraform, how to set up a secure CI / CD pipeline, and how to use loops and conditionals with modules.

In part 2 of this series I’ll cover 5 more problems and solutions, including:

- Variable validation: How to use validation blocks to constrain the input variables your module accepts beyond basic type checks.

- Refactoring: How to use moved blocks to safely refactor your Terraform code without having to do state surgery manually.

- Testing: How to use new testing techniques on your Terraform code, including static analysis (e.g., validate, tfsec, tflint) and server testing (e.g., inspec, serverspec, and goss).

- Policy enforcement: How to enforce company policies and compliance requirements using tools such as Terratest, OPA, and Sentinel.

- Maturity: How Terraform has become more stable due to the Terraform 1.0 release, the growth of the community, and the HashiCorp IPO.

If you’d like access to all of this content now, grab yourself a copy of the Terraform: Up & Running, 3rd edition early release! You can be one of the first to read it, even while the book is still being edited, and I’d be grateful for your feedback.